Well, here I am. It's 1 AM and I'm crawling, not home from a bar, but on the desktop PC in my office. I don't usually stay up late, but I'm drinking coffee and it would probably take an act of God to get me off my PC right now.

Screaming Frog and others

If the website you are crawling is your own, you have several options, including DeepCrawl, Botify and Nutch. I have never used any of these myself, so I can't vouch for them, but each appears to have its own pros and cons, and they all seem great.

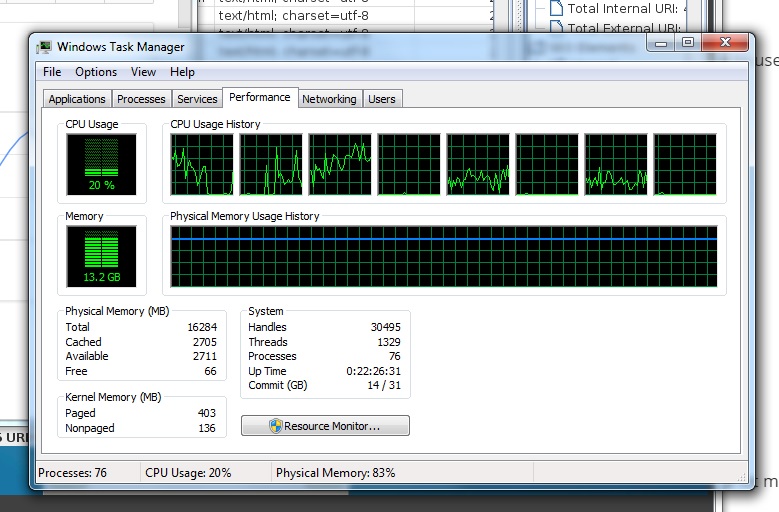

If you don't have access to the website you're crawling, you will probably be using Xenu or Screaming Frog. I've been a fan of Screaming Frog SEO Spider for years; it runs on Linux, Windows and Mac. Today I quickly discovered that my PC is out of date, which surprised me, since I'm running an eight-core AMD CPU with 20TB of storage. The bottleneck isn't the CPU or the drives, though; it's RAM and bandwidth. My 16GB of RAM was eaten quickly. You can crawl a site this size with Screaming Frog on a desktop PC if you allocate 12GB of RAM to the program and have time to wait. I didn't have time today, so I signed up for Google Cloud like some kind of mad SEO scientist. 🙂

Google Compute Engine

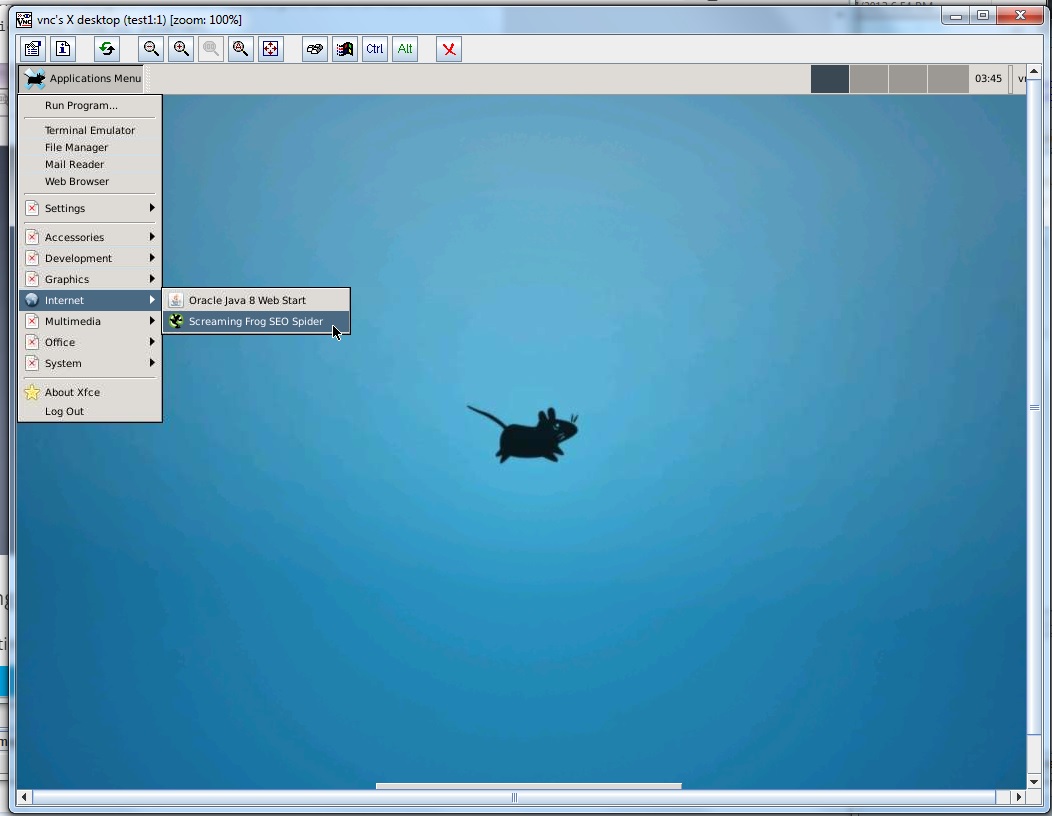

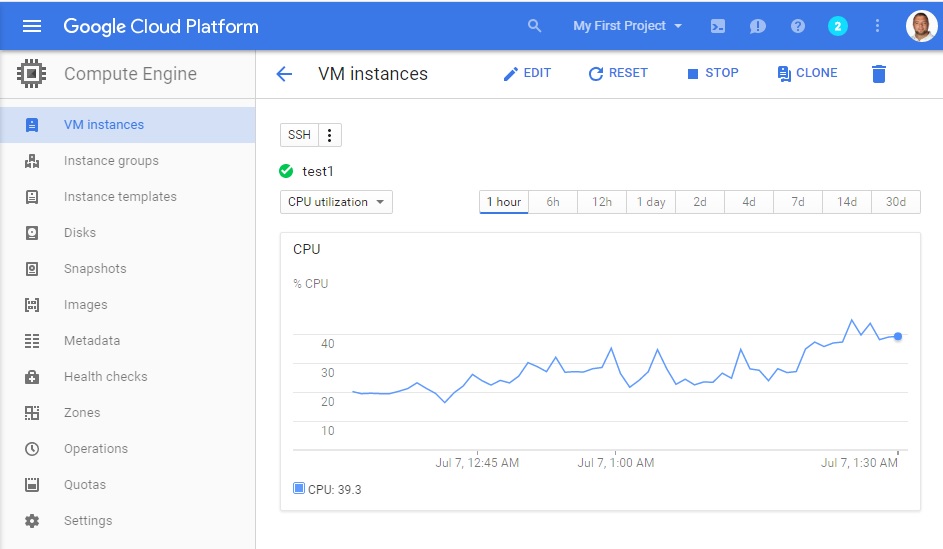

I have an extensive background in computers dating back to 1987, but I haven't played with VMs (virtual machines) much. Today I got my feet wet. Thankfully I have some experience with Debian, and this guy wrote an epic tutorial on how to run Screaming Frog on Google Cloud. After an hour of fiddling around (configuring my VM, changing firewall rules, installing software, creating users, setting up SSH and whatnot), bam! I'm in via VNC!

Here's another great article on configuring Screaming Frog to crawl a large website. Basically, you need to allocate about 12GB of RAM for 1 million pages, and use common sense.
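To put that rule of thumb into numbers, here is a rough sizing sketch. It assumes, as my own simplification rather than anything Screaming Frog publishes, that memory needs scale linearly with page count; the helper names are hypothetical. Screaming Frog is a Java application, so the allocation ultimately becomes a JVM maximum-heap value of the form `-Xmx12g`; where you set it depends on your version and OS, so check the official user guide.

```python
import math

# Rule of thumb from the article above: about 12 GB of RAM per 1 million pages.
GB_PER_MILLION_PAGES = 12.0


def estimate_heap_gb(page_count: int, gb_per_million: float = GB_PER_MILLION_PAGES) -> int:
    """Rough RAM estimate in whole GB.

    ASSUMPTION: memory use grows linearly with the number of pages crawled.
    Real crawls vary with page size, stored data and crawler settings.
    """
    return math.ceil(page_count / 1_000_000 * gb_per_million)


def jvm_heap_flag(heap_gb: int) -> str:
    """Format a standard JVM max-heap flag, e.g. '-Xmx12g'."""
    return f"-Xmx{heap_gb}g"


if __name__ == "__main__":
    # 1.5 million is roughly this crawl: 94,000 crawled plus 1.4 million still to go.
    for pages in (1_000_000, 1_500_000):
        gb = estimate_heap_gb(pages)
        print(f"{pages:>9,} pages -> ~{gb} GB ({jvm_heap_flag(gb)})")
```

At roughly 1.5 million pages, the linear estimate lands around 18GB, which would help explain why 16GB of desktop RAM didn't go far. Treat it as a ballpark, not a guarantee.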

GCE is very cool. Even though I don't completely understand what I'm doing, I was able to install a few programs and run them from the command line, then get Screaming Frog up and running in the graphical interface. Ninety minutes in, I've officially discovered 513,000 pages and crawled 94,000 of them. At this point I'm comfortable going to bed. I have 1.4 million pages left to crawl, so at this rate the crawl should finish in roughly 22 more hours. Not too bad.
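The time estimate is simple rate arithmetic: 94,000 pages in 90 minutes is about 1,044 pages a minute, and 1.4 million pages at that pace comes to roughly 22 hours. Here is a small sketch of that calculation; the function name is hypothetical, and it assumes the crawl keeps its average rate so far, which it may not.

```python
def crawl_eta_hours(crawled: int, elapsed_minutes: float, remaining: int) -> float:
    """Hours left, ASSUMING the crawl keeps its average rate so far."""
    pages_per_minute = crawled / elapsed_minutes
    return remaining / pages_per_minute / 60


if __name__ == "__main__":
    rate = 94_000 / 90  # average pages per minute after 90 minutes
    eta = crawl_eta_hours(crawled=94_000, elapsed_minutes=90, remaining=1_400_000)
    print(f"~{rate:,.0f} pages/min, ~{eta:.1f} hours to go")
```

Re-running it with fresh numbers from the crawler's progress counters gives an updated estimate as the rate changes.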

I won't go into why here, but this crawl was urgent. In the future I should be able to crawl from one of my office machines, though I may have to set up a box exclusively for crawling. Or maybe I'll stick with Google. I guess we'll see.

Sorry in advance

It is extremely rare for me to post something on this blog without being 100% confident in what I'm doing. In this case I am green, very green. I will update this post once I've crawled a few more large sites and actually know what I'm talking about.

Have you crawled a site with over 1 million pages?

Please feel free to comment below. Why did you crawl it? Was the website yours? Which program did you use? What other programs did I forget to mention?
